Technical: AI Fixed 150,000 Lot Numbers In VAERS Data

Data Cleaning


This page describes some of the more technical details of the code.

Latest update Sept 27, 2022

Latest version inputs and outputs

This is the tame version, meaning ...

Settings can make the run more aggressive, so that more of an effort is made to find a match for unmatched codes by joining them to groups that already have many members. (For codes that matched a known/validated code, the group count is sometimes just 1.) To run the more aggressive mode, simply set tame_mode to 0 in cleaning.py.

Keep in mind that this is nearest-match correction. Looking through the results you’ll spot some flaws instantly, so weigh the amount of good against the bad.

Input/Run

How to run the data cleaning code by yourself

If not already present, install Python from ActiveState or any of the other sources

Create a folder (I call it `cleaning`, but the name doesn’t matter) under a folder called `vaers` (that name doesn’t matter either)

Grab the latest CDC data: download the `All Years Data` zip file from https://vaers.hhs.gov/data/datasets.html

Unzip all files from 2020 onward, plus those named `NonDomestic` (reports from outside the USA), into the `cleaning` folder
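If you would rather not pick the files out by hand, here is a minimal sketch of that step using Python's standard `zipfile` module. It assumes the archive members are named like `2021VAERSDATA.csv` and `NonDomesticVAERSVAX.csv`; the helper names `wanted` and `extract_wanted` are made up for this example and are not part of cleaning.py.

```python
import re
import zipfile
from pathlib import Path

# Assumption: members are named like "2021VAERSDATA.csv" or
# "NonDomesticVAERSVAX.csv"; adjust the pattern if the naming differs.
YEAR_PREFIX = re.compile(r"^(\d{4})VAERS")


def wanted(member_name: str, first_year: int = 2020) -> bool:
    """Return True for files from first_year onward and for NonDomestic files."""
    name = Path(member_name).name  # ignore any folder inside the archive
    if name.startswith("NonDomestic"):
        return True
    match = YEAR_PREFIX.match(name)
    return bool(match) and int(match.group(1)) >= first_year


def extract_wanted(zip_path: str, target_dir: str = ".") -> list[str]:
    """Extract only the wanted members and return their names."""
    with zipfile.ZipFile(zip_path) as archive:
        names = [n for n in archive.namelist() if wanted(n)]
        archive.extractall(target_dir, members=names)
    return names
```

Calling `extract_wanted` with the path of the downloaded zip file, from inside the `cleaning` folder, would leave only the 2020-and-later and NonDomestic csv files there.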

Run it.

C:\Users\g\Documents\_covid\_vaers\cleaning> cls & python cleaning.py (where `cls` first clears the screen)

The first time you run the program, several things happen automatically: some functions are added to two Python modules (which are installed first if not already present), and the data is coalesced into a single CSV file containing just the COVID records. Don’t be scared; so long as the smoke stays inside the chips, we’re good.

(As an example of how those data files work: the files made available on Friday morning, Apr 22, 2022 contain lines with dates up to the previous Friday, Apr 15, 2022. The files are csv (Comma-Separated Values, essentially just text). The zip file (compressed for quicker download) is 520 MB and contains reports for vaccinations of every type, but the COVID records are extracted automatically by this program to a single smaller csv file the first time it is run. As of April 2022 that file is somewhere around 1.4 GB, with 1.23 million rows/records and growing, and takes up 2.5 GB in memory when loaded by this program.)
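To give a feel for what "extracting the COVID records" involves, here is a minimal sketch using only the standard `csv` module. It is not the code in cleaning.py. It assumes the vaccine file has a `VAX_TYPE` column whose COVID entries start with `COVID19`, and that both files share a `VAERS_ID` column; the function name `covid_rows` is invented for the example.

```python
import csv
import io


def covid_rows(vax_csv, data_csv):
    """Yield merged rows for COVID vaccinations only.

    vax_csv and data_csv are open text files (or any iterable of lines).
    """
    # Index the report-level rows (one per VAERS_ID) for quick lookup.
    reports = {row["VAERS_ID"]: row for row in csv.DictReader(data_csv)}
    for vax in csv.DictReader(vax_csv):
        # Assumption: COVID entries have a VAX_TYPE starting with "COVID19".
        if vax["VAX_TYPE"].startswith("COVID19"):
            yield {**reports.get(vax["VAERS_ID"], {}), **vax}


# Tiny in-memory stand-ins for the real files, for illustration only.
vax_file = io.StringIO("VAERS_ID,VAX_TYPE,VAX_LOT\n1,COVID19,EL3249\n2,FLU4,X1\n")
data_file = io.StringIO("VAERS_ID,SEX,DIED\n1,F,\n2,M,Y\n")
combined = list(covid_rows(vax_file, data_file))
```

With the two stand-in files above, `combined` holds a single row: report 1, with its lot code and its report-level fields side by side.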

To update in any later week, download the new VAERS zip file from the same website, overwrite the extracted csv files in the cleaning folder, remove or rename the single combined file (VAERS_COMBINED_COVID.csv) and run `cleaning.py` again.

Output

Four files:

1. A CSV whose filename records the settings along with output values like the number of unique lot numbers. The filenames look like

01__jnee_0.18__,jnr_0.24__rowsfix_161484__ptrnchng_75768__unq_4404__cnf_15__num_6__ctoffs_0.66_0.77_0.88.csv

2. A copy of the py file that created it, to save that code (versus any changes later), with the same name but .py extension.

3. Another CSV file with the `Cleaned` column from each run for review, called real_run_comparisons.csv if save_runs_to_single_csv is on.

4. Codes sidelined are saved in a file like this with their count: 01_487546_rows_empties_and_junk.csv
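Since the filename in item 1 carries the settings and results of a run, it can be handy to read them back when comparing runs. The sketch below assumes the parts are separated by double underscores and that each part is a name followed by one or more numbers; it treats the comma in the sample filename as stray and strips it. `parse_run_filename` is a made-up helper, and what each abbreviation means is left to the code itself.

```python
def parse_run_filename(filename: str) -> dict:
    """Split a run filename into its run number and named values."""
    stem = filename.rsplit(".", 1)[0]  # drop the extension
    run_number, *parts = stem.split("__")
    settings = {"run": run_number}
    for part in parts:
        # Each part looks like name_value or name_value_value_value.
        name, *values = part.strip(",").split("_")
        numbers = [float(v) if "." in v else int(v) for v in values]
        settings[name] = numbers[0] if len(numbers) == 1 else numbers
    return settings


sample = (
    "01__jnee_0.18__,jnr_0.24__rowsfix_161484__ptrnchng_75768"
    "__unq_4404__cnf_15__num_6__ctoffs_0.66_0.77_0.88.csv"
)
parsed = parse_run_filename(sample)
```

For the sample name, `parsed["unq"]` is 4404 and `parsed["ctoffs"]` is the list of three cutoff values.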

If print_not_matched is on, the list of unmatched codes printed to the screen during the run can be useful.

Columns

Important note for those doing data analysis:

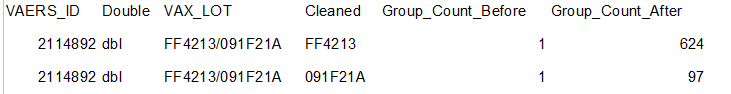

The section of code called multi_lots finds records where either two lot codes were entered in one field, like FF4213/091F21A, or codes can be extracted from the SYMPTOM_TEXT entries. It creates additional records with the same VAERS_ID for those, about 19,000 of them. That could be a problem you will need to work around in your current processes.

The Multi column indicates when more than one code was found.
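As a rough illustration of why the same VAERS_ID can show up more than once, here is a sketch of splitting a two-code field into separate records. It is not the multi_lots code itself: the separator list is an assumption, the extraction from SYMPTOM_TEXT is left out, and storing the number of codes found in `Multi` is only a guess at how that column is filled.

```python
import re

# Assumption: codes sharing one field are separated by "/", ",", ";" or "&".
SEPARATORS = re.compile(r"\s*[/,;&]\s*")


def expand_multi_lots(record: dict) -> list[dict]:
    """Return one record per lot code, all sharing the original VAERS_ID."""
    lots = [lot for lot in SEPARATORS.split(record["VAX_LOT"].strip()) if lot]
    if len(lots) <= 1:
        return [{**record, "Multi": 0}]
    # Assumption: Multi stores how many codes were found in the field.
    return [{**record, "VAX_LOT": lot, "Multi": len(lots)} for lot in lots]


rows = expand_multi_lots({"VAERS_ID": "1", "VAX_LOT": "FF4213/091F21A"})
```

Here `rows` contains two records, one per code, both with VAERS_ID 1. If your analysis counts reports rather than doses, count distinct VAERS_ID values instead of rows.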

Ori_Length is a column I use for development that can be deleted.

Severity columns have various scorings applied within the code. The Sev before and after columns are averages over all records that share the same lot code. Since cleaning changes how many records fall under each code, a lot’s overall severity can change (Sev_Diff). You can adjust the way those are scored within the code. For example, I have Died set to 4, disabled at 3, etc.
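The before/after idea can be sketched as follows. Only the scores for Died (4) and disabled (3) come from the text above; the score for HOSPITAL, the fallback of 1, the use of "Y" flags, and the function names are placeholders chosen for this example.

```python
from collections import defaultdict

# Died = 4 and disabled = 3 come from the text; the HOSPITAL weight is a
# placeholder chosen only for this sketch.
WEIGHTS = [("DIED", 4), ("DISABLE", 3), ("HOSPITAL", 2)]


def severity(row: dict) -> int:
    """Score a report by its most serious outcome flag (1 if none is set)."""
    for column, score in WEIGHTS:
        if row.get(column) == "Y":
            return score
    return 1


def mean_severity_by_lot(rows: list[dict], lot_column: str) -> dict:
    """Average the severity of all rows that share the same lot code."""
    scores = defaultdict(list)
    for row in rows:
        scores[row[lot_column]].append(severity(row))
    return {lot: sum(vals) / len(vals) for lot, vals in scores.items()}


reports = [
    {"VAX_LOT": "EL3249", "Cleaned": "EL3249", "DIED": "Y"},
    {"VAX_LOT": "EL3249", "Cleaned": "EL3249"},
    {"VAX_LOT": "EL324 9", "Cleaned": "EL3249", "DISABLE": "Y"},
]
before = mean_severity_by_lot(reports, "VAX_LOT")
after = mean_severity_by_lot(reports, "Cleaned")
sev_diff = after["EL3249"] - before["EL3249"]
```

In this toy data the mistyped record joins lot EL3249 after cleaning, so that lot's average moves from 2.5 to about 2.67, which is the kind of shift Sev_Diff captures.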

Expiry is the lot code’s expiration date, taken from the expiration_dates.csv file. When applicable, the Expiry_Accepted_Source column indicates which source the date came from, currently one of five.

Gndr is gender (or SEX in the original).

Match_Ratio: Scores returned by the various spell-check methods, sometimes modified afterward and later normalized to the range 0 to 1.

Confidence: Overall score when changes were made, normalized 0 to 100.
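To show what a 0-to-1 nearest-match score looks like, here is a minimal sketch with Python's standard `difflib`. This is a stand-in, not necessarily one of the spell-check methods the code uses. The default cutoff of 0.66 is borrowed from the sample filename purely as an illustrative value, and `nearest_lot` is a hypothetical name.

```python
import difflib


def nearest_lot(code: str, known_lots: list[str], cutoff: float = 0.66):
    """Return (best_match, ratio), or (None, 0.0) if nothing reaches cutoff."""
    matches = difflib.get_close_matches(code, known_lots, n=1, cutoff=cutoff)
    if not matches:
        return None, 0.0
    # ratio() is a similarity score between 0 and 1.
    ratio = difflib.SequenceMatcher(None, code, matches[0]).ratio()
    return matches[0], ratio


known = ["EL3249", "FF4213"]
match, ratio = nearest_lot("EL3Z49", known)
```

A typo like EL3Z49 lands on EL3249 with a ratio a little above 0.8, while a code with nothing in common with the known list returns no match at all.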

Changes order: VAX_LOT -> Clean_First -> Clean_Second -> Cleaned

(Cleaned means cleanest available, not a guarantee of perfect cleanliness.)

Manu_After: Assigned manufacturer based on pattern (including AstraZeneca).

Codes like 025J20-2A

Lots like 025J20-2A check out as valid but are changed to the canonical pattern 025J20A for run simplicity. You could change them back fairly quickly, even manually, but the expirations would no longer be valid at that point unless you also changed those manually using the expiration_dates.csv file. A solution is needed. Perhaps the 21 such codes currently known could be switched back automatically at the end of the run (021B21A-1, 084D21A-1, 025J20-2A, 029K20-2A, 039K20-2A, 001J21-2A, 003J21-2A, 003K21-2A, 007J21-2A, 007K21-2A, 008B21-2A, 016J21-2A, 033K21-2A, 042B21-2A, 052K21-2A, 205D21-2A, 219C21-2A, 020B22-2A, 058A22-2A, 063B22-2A, 075B22-2A), and then the question is … what would happen regarding typo corrections involving them?
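One possible shape for that switch-back, as a sketch only: strip the suffix for the run, then restore the suffixed form at the end for rows whose original VAX_LOT was exactly one of the validated suffixed codes. Rows that were typo-corrected into the canonical form are left alone, so the open question above remains open. The regular expressions and both function names are assumptions for this example.

```python
import re

# Matches forms such as 025J20-2A (digit and letter after the dash).
DASH_DIGIT_LETTER = re.compile(r"^(\d{3}[A-Z]\d{2})-\d([A-Z])$")
# Matches forms such as 021B21A-1 (letter before the dash, digit after).
LETTER_DASH_DIGIT = re.compile(r"^(\d{3}[A-Z]\d{2}[A-Z])-\d$")


def canonical(lot: str) -> str:
    """Map 025J20-2A to 025J20A and 021B21A-1 to 021B21A; else unchanged."""
    match = DASH_DIGIT_LETTER.match(lot)
    if match:
        return match.group(1) + match.group(2)
    match = LETTER_DASH_DIGIT.match(lot)
    return match.group(1) if match else lot


def restore_suffixed(rows: list[dict], valid_suffixed: set[str]) -> None:
    """Put validated suffixed codes back into the Cleaned column, in place."""
    for row in rows:
        if row["VAX_LOT"] in valid_suffixed:
            row["Cleaned"] = row["VAX_LOT"]


rows = [
    {"VAX_LOT": "025J20-2A", "Cleaned": canonical("025J20-2A")},
    {"VAX_LOT": "025J2OA", "Cleaned": "025J20A"},  # a typo fixed by the run
]
restore_suffixed(rows, {"025J20-2A", "021B21A-1"})
```

After the restore, the first row reads 025J20-2A again while the typo-corrected row stays at 025J20A. The expiration lookup would still need to handle both forms.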

Tools

In case anyone’s interested, these are some tools I use and find handy

Notepad++ (main code-writing)

PyCharm (debugger environment)

Paintshop Pro (very old version, like 90s, simple)

CompareIt (comparing outputs)

CMD (Windows command prompt)

Search & Replace (mostly just for search)

LibreOffice (OpenOffice seems defunct)

Numerous browsers for different tasks

When I have a question my first go-to is a search like …

cls site:stackoverflow.com

Data Size

Options like remove_junk_and_empty_lots especially will need to be ON in most cases, to keep the growing data from being truncated when the csv file is opened in Office, LibreOffice, etc., with their limit of 1,048,576 rows. The data currently starts at 1,261,117 rows and ends up at 774,268 rows, with 486,849 sidelined (available in their own separate csv file).
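The arithmetic behind that advice can be sketched like this. The placeholder list standing in for "junk" is an assumption, since the actual rules in cleaning.py are not spelled out here, and both function names are invented for the example.

```python
SPREADSHEET_ROW_LIMIT = 1_048_576  # total rows, header included


def split_junk(rows: list[dict], lot_column: str = "VAX_LOT"):
    """Separate rows with a usable lot code from empty or junk ones."""
    # Assumption: "junk" here means empty or a common placeholder value.
    placeholders = {"", "UNKNOWN", "UNK", "N/A", "NONE"}
    kept, sidelined = [], []
    for row in rows:
        lot = (row.get(lot_column) or "").strip().upper()
        (sidelined if lot in placeholders else kept).append(row)
    return kept, sidelined


def fits_in_spreadsheet(row_count: int) -> bool:
    """True if row_count data rows plus one header row stay within the limit."""
    return row_count + 1 <= SPREADSHEET_ROW_LIMIT


starting_rows = 1_261_117
sidelined_rows = 486_849
remaining_rows = starting_rows - sidelined_rows
```

With the figures above, the full data does not fit in a spreadsheet but the 774,268 remaining rows do.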

Doing great in life and could donate to my work?

I’m not asking for money from regular people, but if you’re blessed with lots of extra money in life, you can make it possible for me to do more of this type of thing.

Otherwise I’ll need to go to work for some company to make money.

For example if you bought a few thousand bitcoin for a few dollars each back in the day and could share some of those, it would be life-changing for the better here.

I will appreciate you forever.

Paypal: At the email below

Bitcoin: bc1qu7r6lztn97m5mqs8dcc936pnafnjyewsnwqtwh

To communicate …

Thanks much, Gary! I've downloaded and done some stats on the VAERS data several times, and I just ignored what I thought was just crap in the lot field. I'll run your stuff and get back to you. Jessica Rose is downloading and processing the VAERS data each Friday, and I think she's also using python. Todd Harvey

Can you please subscribe to Match Your Batch substack so we can touch base? I'm interested in adding your work to matchyourbatch.org, but I have some questions